Web scraping techniques have transformed the way we gather and analyze data from the internet. With libraries like Beautiful Soup in Python, individuals and businesses can extract data from web pages with relatively little code and turn it into useful insights. The process relies on HTML parsing to navigate the structure of a page so that accurate, relevant information is retrieved efficiently. Automating web data retrieval saves time and helps organizations stay competitive in data-driven markets. Whether you want to monitor trends, support research, or collect near-real-time data, mastering web scraping techniques lets you make use of the wealth of information available online.

The practice of extracting information from websites, also called web harvesting or screen scraping, plays a crucial role in the modern data landscape. Tools and frameworks for automated data collection let practitioners replace manual copy-and-paste work with repeatable scripts. HTML parsing skills make it possible to dissect complex page structures and pinpoint exactly the elements you need. Because automated web data collection is useful across many sectors, it has become a valuable competency for developers, analysts, and marketers alike. The sections below explore practical applications and best practices for collecting online data effectively.

Understanding Web Scraping Techniques

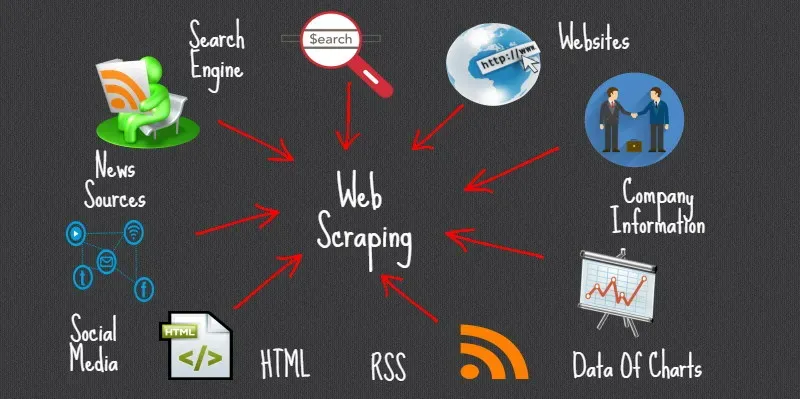

Web scraping techniques involve extracting data from web pages, which is especially useful for gathering information from many online sources. Automated scripts collect data far faster than manual data entry, saving time and resources. The primary objective is to turn the loosely structured HTML of a web page into structured data, such as rows or records, that you can analyze or store for later use.

One of the most popular approaches to web scraping is to use a library such as Beautiful Soup in Python. It parses HTML and XML documents and simplifies navigation through complex, nested HTML structures. Whether you need to extract the contents of specific tags or gather attributes from elements, Beautiful Soup gives you a systematic approach to data extraction.
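As a minimal sketch of reading attributes, the snippet below parses a small, made-up HTML fragment (standing in for a downloaded page) and pulls the `src` and `alt` attributes from each image. The sample markup is an assumption for illustration; the built-in `html.parser` backend is used so no extra parser needs to be installed.

```python
from bs4 import BeautifulSoup

# Hypothetical sample fragment; a real scraper would get this from an HTTP response.
html = """
<div class="gallery">
  <img src="/img/red.jpg" alt="Red mug">
  <img src="/img/blue.jpg">
</div>
"""

soup = BeautifulSoup(html, "html.parser")

for img in soup.find_all("img"):
    # tag["src"] raises KeyError if the attribute is missing;
    # tag.get() returns a default instead, which suits optional attributes like alt.
    print(img["src"], img.get("alt", "(no alt text)"))

# .attrs exposes all attributes of a tag as a dictionary.
print(soup.find("img").attrs)
```

Square-bracket access is a good fit for attributes you expect on every element, while `.get()` avoids crashes on optional ones.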

Getting Started with Beautiful Soup in Python

Beautiful Soup is a Python package designed for HTML and XML parsing, making it an excellent choice for web scraping projects. To get started, install it with pip; the package is published as `beautifulsoup4` and imported in code as `bs4`. Beautiful Soup does not download pages itself, so it is usually paired with a library like Requests that fetches the page content, which Beautiful Soup then parses. This combination lets you extract relevant information without the hassle of copying it by hand.
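A minimal sketch of the Requests plus Beautiful Soup combination is shown below, assuming both packages are installed (`pip install requests beautifulsoup4`). The `fetch_page` and `parse_headings` names and the choice of `<h2>` elements are illustrative assumptions, not part of any particular site's layout.

```python
from bs4 import BeautifulSoup


def fetch_page(url: str, timeout: float = 10.0) -> str:
    """Download a page and return its HTML as text (raises on HTTP errors)."""
    # Imported here so the parsing helper below can be used without Requests installed.
    import requests

    response = requests.get(url, timeout=timeout)
    response.raise_for_status()  # turn 4xx/5xx responses into exceptions
    return response.text


def parse_headings(html: str) -> list[str]:
    """Return the stripped text of every <h2> element in the document."""
    soup = BeautifulSoup(html, "html.parser")
    return [h2.get_text(strip=True) for h2 in soup.find_all("h2")]
```

Usage would look like `parse_headings(fetch_page(url))` for a URL you are permitted to scrape. Keeping download and parsing in separate functions makes the parsing logic easy to test against saved HTML without touching the network.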

After downloading a page with Requests, you can use Beautiful Soup's methods to navigate and search the parse tree. For instance, the find() method returns the first element that matches a tag name and optional attributes, while find_all() returns every matching element, such as all instances of a given tag on a page. This flexibility is crucial for data extraction tasks, where you might want to collect all article texts or titles for further analysis or reporting.
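The difference between `find()` and `find_all()` is easy to see on a small sample. In this sketch the HTML fragment and the `article`/`title` class names are made up; `select()`, Beautiful Soup's CSS-selector method, is included as an alternative way to express the same kind of query.

```python
from bs4 import BeautifulSoup

html = """
<div class="article"><h2 class="title">First post</h2><p>Intro text.</p></div>
<div class="article"><h2 class="title">Second post</h2><p>More text.</p></div>
"""

soup = BeautifulSoup(html, "html.parser")

# find() returns only the first matching element (or None if nothing matches).
first_title = soup.find("h2", class_="title").get_text()

# find_all() returns a list of every matching element.
all_titles = [h2.get_text() for h2 in soup.find_all("h2", class_="title")]

# select() accepts a CSS selector: here, <p> elements directly inside div.article.
article_texts = [p.get_text() for p in soup.select("div.article > p")]

print(first_title)    # First post
print(all_titles)     # ['First post', 'Second post']
print(article_texts)  # ['Intro text.', 'More text.']
```

Note the trailing underscore in `class_`: `class` is a reserved word in Python, so Beautiful Soup uses `class_` for filtering by CSS class.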

The Importance of HTML Parsing for Data Extraction

HTML parsing is a critical component of web scraping as it helps convert raw web data into a structured format that can be analyzed easily. When you scrape a website, your first step usually involves downloading the page’s HTML content and then parsing it for the specific information you need. With parsing, you can identify and isolate relevant data points such as headings, text, and links that would otherwise be buried within the page.
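To make the step from raw HTML to structured data concrete, the sketch below turns a page into a plain dictionary of headings, paragraph text, and absolute link URLs. The `page_to_record` name, the sample HTML, and the base URL are hypothetical; `urljoin` from Python's standard library resolves relative links against the page address.

```python
from urllib.parse import urljoin

from bs4 import BeautifulSoup


def page_to_record(html: str, base_url: str) -> dict:
    """Convert raw HTML into a dict of headings, paragraphs, and absolute links."""
    soup = BeautifulSoup(html, "html.parser")
    return {
        # find_all accepts a list of tag names and returns matches in document order
        "headings": [h.get_text(strip=True) for h in soup.find_all(["h1", "h2", "h3"])],
        "paragraphs": [p.get_text(strip=True) for p in soup.find_all("p")],
        # href=True keeps only <a> tags that actually have an href attribute;
        # urljoin turns relative paths like "/about" into full URLs
        "links": [urljoin(base_url, a["href"]) for a in soup.find_all("a", href=True)],
    }


sample = '<h1>News</h1><p>Top story.</p><a href="/about">About</a><a name="anchor">x</a>'
print(page_to_record(sample, "https://example.com/news/"))
```

Once each page is a dictionary like this, it can be written to CSV, JSON, or a database without any further HTML handling.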

Tools like Beautiful Soup make HTML parsing straightforward. By traversing the parse tree, you can filter and capture exactly the content you’re interested in, whether it’s product listings, blog posts, or other types of information. Because every later step works from what the parser extracts, the precision of this step directly affects the quality and usability of the collected data.
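For repeated items such as product listings, a common pattern is to select each item's container first and then read its fields relative to that container, so that a name is never paired with the wrong price. The markup, the class names (`product`, `name`, `price`), and the `extract_products` helper below are assumptions for illustration; real sites use their own structure.

```python
from bs4 import BeautifulSoup

# Hypothetical listing markup.
LISTING_HTML = """
<ul>
  <li class="product"><span class="name">Desk Lamp</span><span class="price">$24.99</span></li>
  <li class="product"><span class="name">Office Chair</span><span class="price">$149.00</span></li>
</ul>
"""


def extract_products(html: str) -> list[dict]:
    """Return one dict per product container, with its name and price text."""
    soup = BeautifulSoup(html, "html.parser")
    products = []
    for item in soup.select("li.product"):
        # select_one searches only inside the current <li>, keeping fields paired.
        # This sketch assumes both fields exist; missing ones would raise AttributeError.
        products.append({
            "name": item.select_one(".name").get_text(strip=True),
            "price": item.select_one(".price").get_text(strip=True),
        })
    return products


print(extract_products(LISTING_HTML))
```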

Automating Data Extraction from Websites

Automated web data extraction relies on scripts that scrape websites at regular intervals. This level of automation saves time and reduces the errors that creep into manual data collection. Beautiful Soup handles the parsing inside such a script, while the scheduling itself comes from a separate tool, such as cron on Linux and macOS, Task Scheduler on Windows, or a loop inside a long-running script, so that the latest content from your target sites is collected automatically.
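If you would rather not rely on an external scheduler such as cron, a simple in-process loop is one option. The sketch below calls a scraping function at a fixed interval; `run_periodically`, its parameters, and the dummy job are hypothetical, and `max_runs` exists only so the loop can stop (a long-running scraper would typically leave it as `None`).

```python
import time
from typing import Callable, Optional


def run_periodically(
    job: Callable[[], None],
    interval_seconds: float,
    max_runs: Optional[int] = None,
) -> int:
    """Call `job` every `interval_seconds` seconds; return how many runs were attempted."""
    runs = 0
    while max_runs is None or runs < max_runs:
        started = time.monotonic()
        try:
            job()
        except Exception as exc:  # keep the schedule alive if a single run fails
            print(f"scrape failed: {exc!r}")
        runs += 1
        if max_runs is not None and runs >= max_runs:
            break
        # Sleep for whatever remains of the interval after the job's own runtime.
        elapsed = time.monotonic() - started
        time.sleep(max(0.0, interval_seconds - elapsed))
    return runs


if __name__ == "__main__":
    # Dummy job standing in for a real fetch-and-parse function.
    run_periodically(lambda: print("scraping..."), interval_seconds=1.0, max_runs=3)
```

Catching exceptions per run means one failed request (a timeout, say) does not end the whole schedule; cron gives you the same isolation for free because each run is a separate process.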

By automating your data extraction process, you not only enhance efficiency but also gather more comprehensive datasets over time. This ongoing collection is especially useful in fields like market research or competitive analysis, where keeping up with changes on websites can provide valuable insights. As such, understanding automation in conjunction with web scraping techniques opens doors to more sophisticated data handling practices.
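One simple way to build a dataset over time is to append each scrape as timestamped records to a JSON Lines file, which stores one JSON object per line. The `append_snapshot` and `load_history` helpers are illustrative assumptions; a database would serve the same purpose at larger scale.

```python
import json
from datetime import datetime, timezone
from pathlib import Path


def append_snapshot(records: list[dict], path: Path) -> int:
    """Append records to a JSON Lines file, stamping each with the scrape time (UTC)."""
    scraped_at = datetime.now(timezone.utc).isoformat()
    with path.open("a", encoding="utf-8") as fh:
        for record in records:
            fh.write(json.dumps({**record, "scraped_at": scraped_at}) + "\n")
    return len(records)


def load_history(path: Path) -> list[dict]:
    """Read every stored record back, oldest first."""
    with path.open(encoding="utf-8") as fh:
        return [json.loads(line) for line in fh if line.strip()]
```

Calling `append_snapshot(records, Path("history.jsonl"))` after each scheduled run (the file name is hypothetical) accumulates a history that can later be loaded and compared across dates.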

Challenges in Web Scraping and HTML Parsing

While web scraping and HTML parsing can yield valuable information, several challenges can arise along the way. Some websites deploy measures such as CAPTCHAs to deter scraping, and many load content dynamically with JavaScript, so the data you want may not be present in the initial HTML at all. Additionally, page structures change over time, and a redesign can silently break scraping scripts that are not regularly maintained.
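Layout changes cannot be prevented, but a scraper can react to them predictably instead of crashing deep inside the parsing code. The sketch below tries a list of candidate CSS selectors in order and returns `None` when none match, so a missing field can be logged and the selectors updated. The selectors, the sample pages, and the `first_text` helper are hypothetical.

```python
from typing import Optional, Sequence

from bs4 import BeautifulSoup


def first_text(soup: BeautifulSoup, selectors: Sequence[str]) -> Optional[str]:
    """Return the text of the first element matched by any selector, else None."""
    for selector in selectors:
        element = soup.select_one(selector)
        if element is not None:
            return element.get_text(strip=True)
    return None


# The same field before and after a hypothetical redesign.
old_page = BeautifulSoup('<span class="price">$10</span>', "html.parser")
new_page = BeautifulSoup('<div data-testid="price">$12</div>', "html.parser")

# Selectors are tried in order; add new ones as the site changes.
PRICE_SELECTORS = [".price", '[data-testid="price"]']

for page in (old_page, new_page):
    price = first_text(page, PRICE_SELECTORS)
    if price is None:
        print("price not found: selectors may need updating")
    else:
        print(price)
```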

Furthermore, ethical considerations play a crucial role in web scraping. It’s important to abide by a website’s terms of service and ensure that your scraping activities do not violate any rules or regulations. Being aware of the challenges and ethical implications ensures that your web scraping initiatives remain effective and responsible.

Best Practices for Web Data Collection

To optimize your web scraping efforts, adhering to best practices is essential. First, always check the website’s robots.txt file, which lists the paths the site asks automated clients not to access and, on some sites, a preferred delay between requests. This file provides guidelines on how web crawlers should interact with the site. By respecting these rules, you not only follow ethical standards but also reduce the risk of your IP address being blocked.
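Python's standard library includes `urllib.robotparser` for this check. The sketch below parses robots.txt rules supplied as text so it runs offline; against a live site you would instead call `set_url()` with the site's robots.txt URL followed by `read()`. The rules, the user-agent name, and the URLs are made-up examples.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content for illustration.
ROBOTS_TXT = """
User-agent: *
Disallow: /private/
Crawl-delay: 5
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

agent = "MyResearchBot/1.0"  # hypothetical user-agent name for your scraper
for url in ("https://example.com/products", "https://example.com/private/report"):
    allowed = parser.can_fetch(agent, url)
    print(url, "allowed" if allowed else "disallowed")

# crawl_delay() returns the Crawl-delay value that applies to the agent, or None if unset.
print("crawl delay:", parser.crawl_delay(agent))
```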

Another common practice when scraping large amounts of data is rotating user-agent strings and using proxies so that requests are less likely to be detected and blocked. Equally important is adding delays between requests: spacing requests out keeps the load on the target server low, so your scraping does not disrupt the site, and makes the traffic look more like human browsing, further reducing the chance of being flagged as a bot.
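The delay part of this advice is easy to build in. The sketch below wraps any fetch function so that consecutive calls are spaced by a base delay plus a small random jitter. `PoliteFetcher`, its parameters, and the default values are illustrative assumptions; if a site publishes a `Crawl-delay`, it is a sensible lower bound for `min_delay`.

```python
import random
import time
from typing import Callable, Optional


class PoliteFetcher:
    """Wrap a fetch function so successive calls are spaced out in time."""

    def __init__(
        self,
        fetch: Callable[[str], str],
        min_delay: float = 2.0,
        jitter: float = 1.0,
        sleep: Callable[[float], None] = time.sleep,
    ) -> None:
        self._fetch = fetch
        self._min_delay = min_delay
        self._jitter = jitter
        self._sleep = sleep  # injectable so the behaviour can be tested without waiting
        self._last_call: Optional[float] = None

    def get(self, url: str) -> str:
        """Fetch `url`, first waiting so calls are at least min_delay (+ jitter) apart."""
        if self._last_call is not None:
            wait = self._min_delay + random.uniform(0, self._jitter)
            elapsed = time.monotonic() - self._last_call
            if elapsed < wait:
                self._sleep(wait - elapsed)
        self._last_call = time.monotonic()
        return self._fetch(url)
```

With Requests, the wrapped function could be something like `lambda url: requests.get(url, timeout=10).text`. The random jitter avoids a perfectly regular request rhythm, and measuring elapsed time means slow responses count toward the delay instead of adding to it.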

Leveraging Data Extracted for Business Insights

Once data has been successfully extracted via web scraping techniques, the next crucial step is leveraging that data for business insights. Analyzing the scraped data can provide valuable information about market trends, consumer behavior, or competitor activities. These insights can then be used to inform strategic decisions, marketing campaigns, and product development efforts.

For instance, businesses can analyze scraped data from social media to understand public sentiment about their brand or products. Similarly, monitoring competitors’ pricing strategies through web scraping can help businesses adjust their pricing models effectively. In this manner, automated web data extraction serves as a valuable tool for maintaining a competitive edge in today’s fast-paced market.
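As a small illustration of the pricing use case, the sketch below converts scraped price strings into numbers and reports how your price compares with the cheapest competitor. The shop names and prices, the helper names, and the assumption that prices look like `$1,299.00` (with `.` as the decimal separator) are hypothetical; real data usually needs more careful currency and locale handling.

```python
import re
from decimal import Decimal


def parse_price(text: str) -> Decimal:
    """Turn a scraped string like '$1,299.00' into Decimal('1299.00')."""
    # Drop currency symbols and thousands separators; assumes '.' is the decimal point.
    cleaned = re.sub(r"[^\d.]", "", text)
    return Decimal(cleaned)


def compare_to_cheapest(our_price: str, competitor_prices: dict[str, str]) -> tuple[str, Decimal]:
    """Return the cheapest competitor and how much higher (+) or lower (-) our price is."""
    parsed = {name: parse_price(p) for name, p in competitor_prices.items()}
    cheapest = min(parsed, key=parsed.get)
    return cheapest, parse_price(our_price) - parsed[cheapest]


competitors = {"Shop A": "$1,299.00", "Shop B": "$1,249.50", "Shop C": "$1,310.00"}
print(compare_to_cheapest("$1,279.00", competitors))
```

`Decimal` is used instead of `float` so that money arithmetic does not pick up binary rounding errors.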

The Future of Web Scraping and Data Extraction

The future of web scraping and data extraction looks promising as technologies continue to evolve. Advances in artificial intelligence and machine learning are expected to improve the accuracy and efficiency of web scraping. Tools that integrate AI could learn from previous patterns and adapt to changes in website structures, making scrapers less brittle.

Moreover, as businesses increasingly recognize the importance of data in decision-making, demand for efficient web scraping solutions will likely grow. This points toward more sophisticated tools that not only scrape data but also analyze and interpret it in real time, giving companies a more complete analytics pipeline.

Frequently Asked Questions

What are the best web scraping techniques to extract data from websites?

Some of the best web scraping techniques include using libraries like Beautiful Soup and Scrapy in Python. These tools allow you to efficiently parse HTML, navigate the DOM, and extract relevant data such as text, links, and images. Automating web data extraction helps in gathering large volumes of information quickly.

How does Beautiful Soup work for HTML parsing in web scraping?

Beautiful Soup is a Python library that simplifies web scraping by parsing HTML and XML documents. It builds a parse tree from the document, which you can then search and navigate to extract data. With Beautiful Soup, you can move through the structure of a webpage and pull information from specific tags or attributes.
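Beyond searching, the parse tree can be walked directly. This sketch uses a made-up table fragment to show a few of Beautiful Soup's navigation tools: `.parent` to move up, `find_next_sibling()` to move sideways, and a non-recursive `find_all()` to read a tag's direct children.

```python
from bs4 import BeautifulSoup

html = """
<table id="specs">
  <tr><th>Weight</th><td>1.2 kg</td></tr>
  <tr><th>Color</th><td>Black</td></tr>
</table>
"""

# html.parser keeps the markup as written (it does not insert a <tbody>).
soup = BeautifulSoup(html, "html.parser")

# Start from a known label and step sideways to the value in the same row.
weight_label = soup.find("th", string="Weight")
weight_value = weight_label.find_next_sibling("td").get_text()

# Step upward: the label's parent is its <tr>, whose parent is the <table>.
table_id = weight_label.parent.parent["id"]

# Step downward: build a dict from each row's direct child cells.
specs = {}
for row in soup.find_all("tr"):
    cells = row.find_all(["th", "td"], recursive=False)
    specs[cells[0].get_text()] = cells[1].get_text()

print(weight_value)  # 1.2 kg
print(table_id)      # specs
print(specs)         # {'Weight': '1.2 kg', 'Color': 'Black'}
```

Anchoring on a stable label such as "Weight" and moving to its neighbor is often more robust than relying on a cell's position in the table.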

What is data extraction in the context of web scraping?

Data extraction in web scraping refers to the process of retrieving specific pieces of information from web pages. This often involves using automated tools to navigate through HTML structures and gather data like product details, news articles, or any other content that is displayed on websites.

What are the key considerations for automated web data scraping?

When implementing automated web data scraping, consider the website’s terms of service, the legality of scraping the data, the frequency of requests to avoid IP bans, and how to handle dynamic content loaded via JavaScript. Additionally, using robust libraries like Beautiful Soup will help manage HTML parsing effectively.
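When a site signals that requests are arriving too quickly, typically with HTTP 429 (Too Many Requests) or 503 (Service Unavailable), backing off is both polite and practical. This sketch assumes a hypothetical `fetch` callable that returns `(status_code, headers, body)`; with Requests you could build that tuple from `response.status_code`, `response.headers`, and `response.text`. It honors a numeric `Retry-After` header and otherwise doubles the wait on each attempt.

```python
import time
from typing import Callable, Mapping, Tuple

# Hypothetical fetch result: (status_code, headers, body).
FetchResult = Tuple[int, Mapping[str, str], str]


def fetch_with_backoff(
    fetch: Callable[[str], FetchResult],
    url: str,
    max_retries: int = 3,
    base_delay: float = 1.0,
    sleep: Callable[[float], None] = time.sleep,
) -> str:
    """Retry on HTTP 429/503 with exponential backoff; return the body on success."""
    for attempt in range(max_retries + 1):
        status, headers, body = fetch(url)
        if status not in (429, 503):
            if status >= 400:
                raise RuntimeError(f"{url} returned HTTP {status}")
            return body
        if attempt < max_retries:
            retry_after = headers.get("Retry-After", "")
            # Retry-After may also be an HTTP date; this sketch only handles seconds.
            delay = float(retry_after) if retry_after.isdigit() else base_delay * 2 ** attempt
            sleep(delay)
    raise RuntimeError(f"{url} still rate-limited after {max_retries} retries")
```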

Can I use web scraping techniques to scrape data from dynamically loaded sites?

Yes, web scraping techniques can be applied to dynamically loaded sites, but it usually requires additional tools. For such sites, a browser automation library like Selenium, which drives a real browser that executes JavaScript, is often used; Scrapy on its own does not render JavaScript and needs a rendering add-on for these pages. Beautiful Soup can then be employed to parse the rendered HTML for data extraction.
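A sketch of the Selenium-then-Beautiful-Soup handoff is shown below. It assumes Selenium 4 with Chrome available locally; the `render_page` and `parse_items` names and any selector you pass in (for example a hypothetical `.result`) are illustrative. The key idea is to wait until JavaScript has inserted the content, then hand `driver.page_source` to Beautiful Soup.

```python
from bs4 import BeautifulSoup


def render_page(url: str, wait_for_css: str, timeout: float = 10.0) -> str:
    """Load `url` in headless Chrome, wait for `wait_for_css` to appear, return the HTML."""
    # Imported inside the function so the parsing helper works without Selenium installed.
    from selenium import webdriver
    from selenium.webdriver.common.by import By
    from selenium.webdriver.support import expected_conditions as EC
    from selenium.webdriver.support.ui import WebDriverWait

    options = webdriver.ChromeOptions()
    options.add_argument("--headless=new")
    driver = webdriver.Chrome(options=options)
    try:
        driver.get(url)
        # Block until scripts have inserted at least one matching element.
        WebDriverWait(driver, timeout).until(
            EC.presence_of_element_located((By.CSS_SELECTOR, wait_for_css))
        )
        return driver.page_source  # the document as it looks after JavaScript ran
    finally:
        driver.quit()  # always close the browser, even if waiting timed out


def parse_items(html: str, css: str) -> list[str]:
    """Extract the text of every element matching `css` from rendered HTML."""
    soup = BeautifulSoup(html, "html.parser")
    return [el.get_text(strip=True) for el in soup.select(css)]
```

Usage would be along the lines of `parse_items(render_page(url, ".result"), ".result")`. Letting the browser only render and leaving extraction to Beautiful Soup keeps the parsing code identical for static and dynamic pages.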

What are some common challenges faced in web scraping with Beautiful Soup?

Challenges in web scraping with Beautiful Soup include handling changes in website structure, managing large datasets efficiently, dealing with CAPTCHAs, and working within the limits imposed by anti-scraping measures. Regularly updating your scraping scripts and keeping your request rate respectful of website traffic can mitigate many issues.

| Key Points | Details |
|---|---|
| Web Scraping Techniques | Involves extracting data from websites using various methods. |
| Libraries Used | Python’s Beautiful Soup is commonly used for parsing HTML. |
| Data Extraction | Information such as titles, article text, and keywords can be extracted from the HTML tags that contain them. |

Summary

Web scraping techniques are essential for collecting data from online sources. By utilizing libraries such as Beautiful Soup in Python, users can effectively parse HTML content and access valuable information including titles, texts, and keywords. This makes web scraping a powerful tool for anyone looking to gather data from the web.