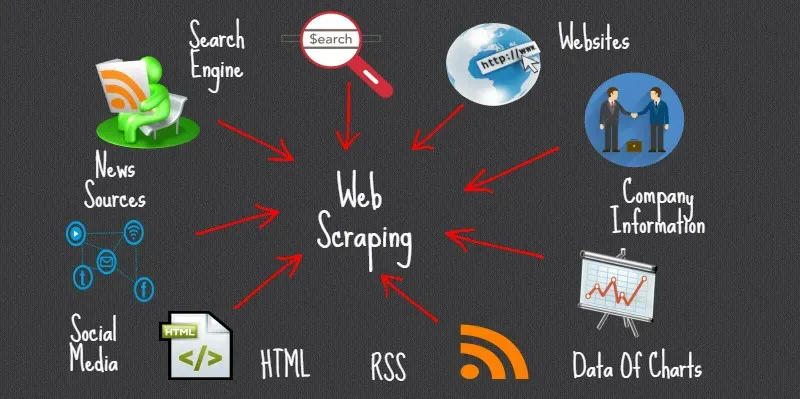

Web scraping techniques let you gather data from websites efficiently. Once you understand how to scrape a site, you can automate data collection and save considerable time and effort. Whether you start with a Beautiful Soup tutorial or move straight to the Scrapy framework, these data extraction methods are a core skill for data-driven web projects. Following web scraping best practices also helps keep your scraping ethical and compliant with each site’s policies. With the right tools and knowledge, you can turn publicly available web pages into structured, usable data.

Harvesting data from online sources involves several methodologies. Automated crawlers that follow links through a site and parsing libraries designed for simplicity form the backbone of digital information retrieval. Tools like Beautiful Soup and the Scrapy framework give you efficient ways to parse and organize content. Understanding the ethics and legal rules around scraping keeps your practices aligned with the guidelines websites set. Smart data extraction strategies improve your projects while respecting the resources of the sites you collect from.

Introduction to Web Scraping Techniques

Web scraping techniques encompass a variety of methods used to extract data from websites. Whether you are extracting simple text or complex data sets, understanding the fundamental principles of web scraping is essential. Common techniques include parsing HTML content, utilizing APIs, and employing libraries designed specifically for scraping tasks. By applying these techniques effectively, you can gather valuable information for research, data analysis, or market intelligence.

In web scraping, the choice of tools can greatly impact the effectiveness of your data extraction. For instance, Python libraries such as Beautiful Soup and Scrapy offer robust frameworks that simplify the scraping process. Beautiful Soup is particularly effective for beginners due to its intuitive syntax and ability to parse HTML and XML documents effortlessly. On the other hand, Scrapy is a more advanced framework designed for larger-scale scraping projects, enabling users to build spiders that crawl through websites and extract structured data.

The importance of web scraping goes beyond mere data collection; it serves as a foundational skill in today’s data-driven landscape. As organizations rely more on data analysis for strategic decision-making, the ability to scrape websites efficiently will continue to be in demand. Understanding the nuances of web scraping techniques can give you a competitive edge in various fields, including marketing, finance, and academic research.

Moreover, a comprehensive grasp of scraping methods helps you avoid common pitfalls and legal issues. While many websites share data openly, others have terms that prohibit scraping. Knowing which practices are acceptable helps you comply with each site’s rules and applicable laws and follow ethical guidelines, which is vital for maintaining credibility and fostering trust with stakeholders.

Best Practices for Web Scraping

Adhering to web scraping best practices is crucial for keeping your scraping activities efficient and ethical. One primary guideline is to always check the website’s terms of service before attempting to scrape. Many websites explicitly state their policy on automated data extraction, and failure to comply can lead to legal repercussions. It’s also important to respect the website’s server resources; scraping too aggressively can get your IP address blocked.

Another best practice involves implementing proper error handling and data validation methods during scraping. Websites often change their structure, which can lead to broken scripts and missing data. By incorporating robust error handling routines, you can ensure that your scraping process runs smoothly and captures the intended data while gracefully handling unexpected changes. Additionally, testing your scripts in a controlled environment before deploying them can further minimize errors and improve the reliability of your data extraction.
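As a minimal sketch of handling structure changes gracefully, the function below looks up each field with a CSS selector, logs a warning instead of crashing when a selector matches nothing, and rejects records that lack required data. The selectors (`h1.post-title`, `div.post-body`), the `SELECTORS` table, and the `extract_post` helper are hypothetical; substitute whatever the target page actually uses.

```python
import logging
from typing import Optional

from bs4 import BeautifulSoup

logger = logging.getLogger("scraper")

# Hypothetical selectors for a blog post page; adjust them to the real markup.
SELECTORS = {
    "title": "h1.post-title",
    "body": "div.post-body",
}
REQUIRED_FIELDS = {"title"}


def extract_post(html: str) -> Optional[dict]:
    """Extract fields by CSS selector; return None if a required field is missing."""
    soup = BeautifulSoup(html, "html.parser")
    record = {}
    for field, selector in SELECTORS.items():
        node = soup.select_one(selector)
        if node is None:
            # The layout may have changed: log it rather than raising an exception.
            logger.warning("Selector %r for field %r matched nothing", selector, field)
            record[field] = None
        else:
            record[field] = node.get_text(strip=True)
    # Validation: drop records that lack required data.
    missing = [f for f in REQUIRED_FIELDS if not record.get(f)]
    if missing:
        logger.warning("Skipping record, missing required fields: %s", missing)
        return None
    return record
```

Saving a few real pages to disk and running a function like this against them is one way to test a scraper in a controlled environment before pointing it at the live site.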

Tools like Beautiful Soup and Scrapy not only improve efficiency but also help keep your output clean and organized. For example, Beautiful Soup’s navigation functions let you pinpoint data precisely in complex HTML documents. Scrapy’s item pipelines let you clean, validate, and deduplicate each scraped item, and its feed exports can save the results in formats like CSV or JSON, which are easy to analyze and manipulate.

Furthermore, setting a descriptive User-Agent header and adhering to rate limits helps maintain a good relationship with the target website. The User-Agent string identifies your scraping script to the server, and keeping your request rate reasonable helps prevent server overload. By being considerate and following best practices, you improve your web scraping skills while fostering responsible data collection.
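A minimal sketch of both ideas using the `requests` library: a session that sends a fixed User-Agent on every request, and a small limiter that enforces a minimum gap between requests. `USER_AGENT`, `RateLimiter`, `make_session`, and `polite_get` are hypothetical names, and the contact address is a placeholder.

```python
import time
from typing import Optional

import requests

# Hypothetical identity string; replace the name and contact address with your own.
USER_AGENT = "MyResearchScraper/1.0 (+mailto:you@example.com)"


class RateLimiter:
    """Ensure at least `min_interval` seconds pass between consecutive requests."""

    def __init__(self, min_interval: float):
        self.min_interval = min_interval
        self._last: Optional[float] = None

    def wait(self) -> None:
        now = time.monotonic()
        if self._last is not None:
            remaining = self.min_interval - (now - self._last)
            if remaining > 0:
                time.sleep(remaining)
        self._last = time.monotonic()


def make_session() -> requests.Session:
    session = requests.Session()
    # Every request made through this session identifies itself with our User-Agent.
    session.headers.update({"User-Agent": USER_AGENT})
    return session


def polite_get(session: requests.Session, limiter: RateLimiter, url: str) -> str:
    limiter.wait()  # throttle before each request
    response = session.get(url, timeout=10)
    response.raise_for_status()
    return response.text
```

Typical use: create one session and one `RateLimiter(2.0)`, then call `polite_get(session, limiter, url)` for each URL in your crawl list.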

Getting Started with Beautiful Soup

Beautiful Soup is a powerful Python library that simplifies web scraping. It parses HTML and XML documents (XML parsing requires lxml), making it easy to navigate the document tree, search for specific elements, and extract relevant information. To get started, install it with pip; the package is named `beautifulsoup4`. It works with Python’s built-in `html.parser`, and you can optionally install a third-party parser such as lxml or html5lib. Once installed, you can write scripts that fetch web pages and parse their content.

A basic Beautiful Soup tutorial typically involves fetching a webpage with the `requests` library and passing the HTML content to Beautiful Soup for parsing. Methods such as `find()` and `find_all()` let you efficiently locate specific tags, such as the heading that holds a post title or the elements that wrap the article content. With Beautiful Soup’s intuitive API, data extraction becomes a straightforward process, allowing both new and seasoned developers to automate data collection.
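Here is a minimal sketch of that workflow. It assumes, hypothetically, that the post title is in the page’s first `<h1>` and the body text is in `<p>` tags; real pages often need more specific selectors.

```python
import requests
from bs4 import BeautifulSoup


def fetch_html(url: str) -> str:
    """Download a page; raise an exception on HTTP errors such as 404 or 500."""
    response = requests.get(url, timeout=10)
    response.raise_for_status()
    return response.text


def parse_post(html: str) -> dict:
    """Pull out a title and paragraphs, assuming an <h1> title and <p> body text."""
    soup = BeautifulSoup(html, "html.parser")
    title_tag = soup.find("h1")  # first <h1>, or None if the page has none
    paragraphs = [p.get_text(strip=True) for p in soup.find_all("p")]
    return {
        "title": title_tag.get_text(strip=True) if title_tag else None,
        "paragraphs": paragraphs,
    }
```

Usage: `post = parse_post(fetch_html("https://example.com/some-post"))`, where the URL is a placeholder. Keeping fetching and parsing in separate functions makes the parser easy to test against saved HTML.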

Another advantageous feature of Beautiful Soup is its ability to clean up messy HTML or XML documents. In web scraping, you may encounter poorly structured pages that contain unnecessary tags or scripts. Beautiful Soup provides functionalities for navigating the document structure and filtering out unwanted items, ensuring that the final output is useful and relevant. By leveraging these capabilities, you can enhance the quality of your scraped data.
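A small sketch of that kind of cleanup: remove `<script>`, `<style>`, and `<noscript>` elements with `decompose()`, then collapse the remaining text. The `.ad` class used to spot advertising blocks is a hypothetical example.

```python
from bs4 import BeautifulSoup


def clean_text(html: str) -> str:
    """Return readable text with scripts, styles, and ad blocks removed."""
    soup = BeautifulSoup(html, "html.parser")
    # Calling the soup is shorthand for find_all(); remove non-content elements.
    for tag in soup(["script", "style", "noscript"]):
        tag.decompose()
    # Hypothetical ad containers; the class name "ad" is an assumption.
    for ad in soup.select(".ad"):
        ad.decompose()
    # Join text fragments with single spaces and drop whitespace-only pieces.
    return soup.get_text(separator=" ", strip=True)
```

The deliberately sloppy input in a quick check (an unclosed `<p>`) shows that Beautiful Soup still builds a usable tree from malformed markup.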

While Beautiful Soup is user-friendly, it is important to remember that it is not a complete solution for every scraping task. For larger scale projects, integrating Beautiful Soup with other libraries can optimize the scraping process further. Utilizing libraries like Scrapy along with Beautiful Soup can help manage scraping workflows and handle large volumes of data more effectively. This combination allows for both detailed data extraction and comprehensive project management, making your scraping endeavors efficient and productive.

Exploring the Scrapy Framework

Scrapy is a robust and versatile framework designed specifically for web scraping at scale. Unlike Beautiful Soup, which is a library focused solely on parsing, Scrapy provides a complete ecosystem for building web crawlers and managing data extraction projects. One of its most compelling features is asynchronous networking: Scrapy can have many requests in flight at once, which greatly speeds up crawls, while settings such as download delays and per-domain concurrency limits keep that speed from overwhelming the target server.
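The throttling side is configured in a project’s `settings.py`. The values below are illustrative assumptions rather than recommendations for any particular site; the setting names are standard Scrapy settings.

```python
# settings.py: illustrative values only; tune them for the site you are crawling.

# Obey robots.txt rules.
ROBOTSTXT_OBEY = True

# Upper limit on simultaneous requests to any single domain.
CONCURRENT_REQUESTS_PER_DOMAIN = 4

# Base delay, in seconds, between requests to the same site.
DOWNLOAD_DELAY = 1.0

# Let AutoThrottle adjust the delay based on observed server response times.
AUTOTHROTTLE_ENABLED = True
AUTOTHROTTLE_START_DELAY = 1.0
AUTOTHROTTLE_MAX_DELAY = 30.0
# Average number of requests Scrapy should send in parallel to each remote server.
AUTOTHROTTLE_TARGET_CONCURRENCY = 1.0
```

Concurrency and delay pull in opposite directions; AutoThrottle is one way to let Scrapy find a rate the server is comfortable with instead of hard-coding a single number.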

To get started with Scrapy, install it with pip, then create a new project with the `scrapy startproject` command. Scrapy’s architecture organizes your code into components such as spiders, items, and pipelines, which keeps your scraping projects easy to maintain and scale. The spider defines how to crawl a website, the item class represents the data structure you want to extract, and the pipeline processes that data, including cleaning it and saving it in the desired format.

One of the advantages of using Scrapy is the built-in support for handling redirects, following links, and managing cookies, which simplifies the scraping process, especially for websites with complex layouts. Additionally, Scrapy comes with a powerful shell that allows developers to test their XPath and CSS selectors interactively, making troubleshooting and debugging much easier. This iterative approach to development can save you significant time and energy when building your scraping applications.

Moreover, Scrapy’s community is very active, and numerous plugins and extensions are available to extend the framework’s capabilities, adding features such as CAPTCHA handling and browser automation for JavaScript-heavy pages. Other features, such as automatic retries of failed requests, are built into Scrapy itself. By fully utilizing the Scrapy framework, you can streamline your data extraction processes and build sophisticated web scraping solutions capable of collecting and processing large volumes of data efficiently.

Understanding Data Extraction Methods

Data extraction methods are the ways information is gathered from websites, and they are central to web scraping. There are several, including regular expressions, HTML parsing, and API requests, each suited to different purposes depending on the structure of the website and the type of data you want. Understanding these methods allows you to select the most suitable approach for your specific scraping goals.

HTML parsing, for instance, is commonly used when working with web pages, as it allows for precise targeting of HTML elements to extract relevant content. Libraries like Beautiful Soup and Scrapy excel in this area, allowing users to navigate the hierarchical nature of HTML documents easily. Regular expressions can also be combined with these tools for additional flexibility, particularly when dealing with text parsing, where specific patterns need to be identified.
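A short sketch of combining the two: Beautiful Soup accepts a compiled regular expression as an attribute filter, and a regex can also be run over the text Beautiful Soup extracts. The PDF-link and ISO-date patterns are illustrative choices, and `find_pdf_links` and `find_dates` are hypothetical helper names.

```python
import re

from bs4 import BeautifulSoup

# Matches ISO-style dates such as 2024-03-15.
DATE_RE = re.compile(r"\b\d{4}-\d{2}-\d{2}\b")


def find_pdf_links(html: str) -> list:
    """Return href values ending in .pdf, using a regex as the attribute filter."""
    soup = BeautifulSoup(html, "html.parser")
    pdf_href = re.compile(r"\.pdf$", re.IGNORECASE)
    return [a["href"] for a in soup.find_all("a", href=pdf_href)]


def find_dates(html: str) -> list:
    """Parse the HTML first, then run the regex over the text content only."""
    text = BeautifulSoup(html, "html.parser").get_text(" ")
    return DATE_RE.findall(text)
```

Parsing before matching keeps the regex from accidentally matching inside tag attributes or scripts.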

APIs (application programming interfaces) are another data extraction method and can often be more efficient than scraping web pages directly. Many sites offer APIs that provide structured access to their data, removing the need to parse HTML. When an API is available, using it can simplify your data extraction significantly and tends to give you more consistent, up-to-date data than parsing rendered pages.
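A minimal sketch of pulling data from a JSON API with `requests`. The endpoint, the `page` parameter, the `results` key, and the `name`/`price` fields are all hypothetical; a real API documents its own URLs, parameters, authentication, and response shape.

```python
import requests

# Hypothetical endpoint; check the real API's documentation and terms of use.
API_URL = "https://api.example.com/v1/products"


def fetch_products(page: int = 1) -> list:
    """Request one page of JSON results instead of parsing HTML."""
    response = requests.get(API_URL, params={"page": page}, timeout=10)
    response.raise_for_status()
    # Assumes the API wraps its records in a "results" list.
    return response.json().get("results", [])


def to_rows(records: list) -> list:
    """Keep only the fields we need; the data is already structured."""
    return [{"name": r.get("name"), "price": r.get("price")} for r in records]
```

Compared with HTML scraping, there are no selectors to maintain here: the field names come straight from the API’s response format.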

Overall, understanding the various data extraction methods will enhance your web scraping capabilities, allowing you to choose the right tool for every situation. Whether you’re scraping articles from news sites, collecting product information from e-commerce platforms, or gathering market data for analysis, the ability to identify and implement the most effective extraction method is essential for successful web scraping.

Legal Considerations in Web Scraping

Web scraping operates in a complex legal landscape, with laws and regulations varying significantly across regions and websites. Thus, it is important to consider the legal implications before initiating any web scraping project. Many websites have terms of service that explicitly outline the conditions under which data may be extracted, and failing to adhere to these can result in your IP being blocked or even legal repercussions.

Additionally, data privacy regulations, such as the GDPR in the European Union or CCPA in California, impose constraints on how personal information can be collected and processed. When scraping content from websites that may include personal data, you must ensure compliance with these regulations to avoid infringements and protect user rights. This consideration not only safeguards you legally but also establishes your credibility as a responsible data collector.

It’s also wise to stay informed about the evolving legal standards regarding web scraping, as case law in this area is continually developing. Several high-profile court cases have set precedents that influence how businesses and developers approach scraping. Familiarizing yourself with these developments can help you adapt your scraping strategies and comply with any new regulations.

In summary, while web scraping offers immense opportunities for data gathering and analysis, navigating the legal landscape requires due diligence. By respecting a website’s terms of service, understanding data privacy laws, and following legal developments, you can engage in web scraping responsibly and beneficially.

Building Your First Scraping Project

Building your first scraping project can be both exciting and daunting. However, by following a structured approach, you can streamline the process and achieve your data extraction goals efficiently. Start with identifying the specific website you want to scrape and the data you’re interested in extracting. From there, familiarize yourself with the site’s structure by inspecting its HTML elements using developer tools in your web browser.

Once you have a clear understanding of the site’s layout, the next step is to choose the appropriate tools for your project. If you’re opting for a Python-based approach, your first task is installing libraries like Beautiful Soup or Scrapy. After setting up your environment, write a simple script to fetch the webpage and parse the HTML data. Begin with straightforward tasks, such as extracting titles or paragraphs, and gradually expand the complexity of your project as you become more comfortable with the libraries.

Another important aspect to consider is how you want to store or process the data once it has been extracted. Many developers choose to save scraped information into CSV, JSON, or a database, especially when handling larger data sets. This planning phase is essential for ensuring that the extracted data is organized and accessible for future analysis.
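For small projects, Python’s standard library is enough to store scraped records. This sketch assumes each record is a flat dict with the same keys; `save_csv` and `save_json` are hypothetical helper names.

```python
import csv
import json


def save_csv(rows: list, path: str) -> None:
    """Write a list of dicts to CSV, using the first row's keys as the header."""
    if not rows:
        return
    with open(path, "w", newline="", encoding="utf-8") as f:
        writer = csv.DictWriter(f, fieldnames=list(rows[0].keys()))
        writer.writeheader()
        writer.writerows(rows)


def save_json(rows: list, path: str) -> None:
    """Write the same records as a JSON array, keeping non-ASCII text readable."""
    with open(path, "w", encoding="utf-8") as f:
        json.dump(rows, f, ensure_ascii=False, indent=2)
```

CSV suits flat, spreadsheet-style data; JSON preserves nesting if your records later grow lists or sub-objects. Larger data sets usually move to a database.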

Finally, as your project evolves, be sure to implement error handling and consider building an iterative testing process. Reviewing your code for bugs and performance issues will not only improve the reliability of your scraping project but also enhance your skills as a developer. By taking a deliberate approach and progressing step-by-step, you can successfully launch your first scraping project with confidence.
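One common piece of error handling is retrying transient failures with exponential backoff. The generic helper below is a sketch; `with_retries` is a hypothetical name, and you choose which exception types count as retryable.

```python
import logging
import time

logger = logging.getLogger("scraper")


def with_retries(func, *args, attempts: int = 3, backoff: float = 1.0,
                 retry_on=(Exception,), **kwargs):
    """Call func(*args, **kwargs), retrying with exponential backoff on retry_on errors."""
    for attempt in range(1, attempts + 1):
        try:
            return func(*args, **kwargs)
        except retry_on as exc:
            if attempt == attempts:
                raise  # out of attempts: let the caller see the error
            delay = backoff * 2 ** (attempt - 1)  # 1s, 2s, 4s, ... when backoff=1.0
            logger.warning("Attempt %d failed (%s); retrying in %.1fs", attempt, exc, delay)
            time.sleep(delay)
```

With a hypothetical `fetch_html(url)` function, a call might look like `with_retries(fetch_html, url, retry_on=(requests.ConnectionError, requests.Timeout))`. Restricting `retry_on` to network errors avoids pointlessly retrying bugs in your own parsing code.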

The Future of Web Scraping Technologies

The future of web scraping technologies is poised for significant advancements, driven by the growing demand for data in various industries. As businesses increasingly recognize the value of data analysis for strategic decision-making, the tools and techniques for web scraping will continue to evolve. New technologies, such as machine learning and artificial intelligence, are expected to enhance data extraction capabilities, making it possible to scrape and analyze vast quantities of data with greater accuracy and efficiency.

As scraping becomes more prevalent, we can also anticipate the development of more sophisticated tools focused on ensuring compliance with legal and ethical standards. Given the concerns surrounding data privacy and security, future web scraping technologies may integrate more robust features for handling sensitive information and maintaining compliance with regulations like GDPR and CCPA. By incorporating these principles into their design, developers can create tools that facilitate responsible data collection.

Furthermore, the response from website developers to scraping activities will also shape the future landscape. Sites may implement more advanced anti-scraping measures, making it essential for scrapers to adapt and innovate their techniques. This cat-and-mouse game could lead to the development of smarter scraping solutions capable of bypassing obstacles while keeping the ethical implications in mind.

In essence, the future of web scraping technologies is full of potential, with opportunities for improved capabilities, compliance measures, and smarter tools on the horizon. By staying abreast of these developments and adapting to the changing landscape, data enthusiasts and professionals can harness the full potential of web scraping to gain insights and drive innovation in their respective fields.

Frequently Asked Questions

What are the best web scraping techniques for beginners?

For beginners in web scraping, the best techniques include using Beautiful Soup for parsing HTML and the Scrapy framework for more complex data extraction. Start with simple tasks, such as extracting titles and content from web pages, before moving on to automating data collection.

How do I use Beautiful Soup for web scraping?

First, install Beautiful Soup (the `beautifulsoup4` package) and requests in Python. Then load a webpage with requests, parse the content with Beautiful Soup, and extract data such as post titles and paragraphs by navigating the HTML structure using tags and classes.

What data extraction methods are effective for large websites?

When scraping large websites, the Scrapy framework is a highly effective choice. Scrapy is designed for high performance and handles many requests asynchronously, making it well suited to extracting large amounts of data efficiently.

What are web scraping best practices to follow?

Web scraping best practices include respecting the website’s robots.txt file, not overwhelming the server with requests, and implementing error handling in your code. Additionally, use a delay between requests to avoid being blocked, and always cite your sources.
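Python’s standard library can read robots.txt rules for you. In this sketch the user-agent string and helper names are hypothetical; `RobotFileParser`, `can_fetch()`, and `crawl_delay()` are part of `urllib.robotparser`.

```python
from urllib.robotparser import RobotFileParser

USER_AGENT = "MyResearchScraper/1.0"  # hypothetical scraper name


def rules_from_text(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt content that has already been downloaded."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    parser.modified()  # record a fetch time so can_fetch() trusts the parsed rules
    return parser


def rules_from_site(base_url: str) -> RobotFileParser:
    """Download and parse <base_url>/robots.txt (makes a network request)."""
    parser = RobotFileParser()
    parser.set_url(base_url.rstrip("/") + "/robots.txt")
    parser.read()
    return parser
```

Before each request, check `rp.can_fetch(USER_AGENT, url)`. If the site declares a crawl delay, `rp.crawl_delay(USER_AGENT)` returns it (or `None`), which you can use as the minimum pause between requests.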

How to scrape websites while handling anti-scraping measures?

To scrape websites that have anti-scraping measures, use techniques such as rotating IP addresses, using user-agent rotation, and employing headless browsers. Combining these methods can help bypass restrictions while ensuring your web scraping efforts remain effective.

What is the difference between Beautiful Soup and Scrapy for web scraping?

Beautiful Soup is primarily a library for parsing HTML and XML content and is great for simple projects, whereas Scrapy is a full-fledged framework designed for large-scale web scraping and includes tools for handling requests, data output, and more advanced features.

How can I extract keywords using web scraping techniques?

To extract keywords using web scraping techniques, look for meta tag content within the head section using Beautiful Soup or Scrapy. Additionally, analyze the main article body for frequently occurring terms that may serve as focus keywords to improve your content strategies.
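A minimal sketch of both approaches with Beautiful Soup: read `<meta name="keywords">` if the page declares it, then count frequent words in the body text. The stop-word list is a tiny illustrative assumption, and `meta_keywords` and `frequent_terms` are hypothetical helper names.

```python
import re
from collections import Counter

from bs4 import BeautifulSoup

# A tiny illustrative stop-word list; real projects use a fuller one.
STOP_WORDS = {"the", "a", "an", "and", "or", "of", "to", "in", "is", "for", "on", "with"}


def meta_keywords(soup: BeautifulSoup) -> list:
    """Read <meta name="keywords" content="..."> if the page declares it."""
    tag = soup.find("meta", attrs={"name": "keywords"})
    if tag is None or not tag.get("content"):
        return []
    return [k.strip() for k in tag["content"].split(",") if k.strip()]


def frequent_terms(soup: BeautifulSoup, top_n: int = 5) -> list:
    """Count lower-cased words in the body text, ignoring stop words and short words."""
    body = soup.body or soup
    # [^\W\d_]+ matches runs of letters, including non-ASCII letters.
    words = re.findall(r"[^\W\d_]+", body.get_text(" ").lower())
    counts = Counter(w for w in words if w not in STOP_WORDS and len(w) > 2)
    return counts.most_common(top_n)
```

Many sites no longer fill in the keywords meta tag, so the frequency count over the article body is often the more useful signal.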

What challenges might I face when learning how to scrape websites?

When learning how to scrape websites, challenges include dealing with dynamic content loaded via JavaScript, understanding website structures and layouts, and navigating anti-scraping technologies. Familiarizing yourself with tools like Scrapy and Beautiful Soup will help mitigate these challenges.

| Key Points |
|---|
| **Post Title**: Usually found within the page’s main heading tag or meta title tags. |
| **Full Content**: Main article content, typically found in body-text tags or specific container elements. |
| **Keywords**: Look for focus and related keywords in meta tags or the main content. |
| **Spam or Ads**: Assess content for aggressive promotion that indicates spam or advertising. |
| **Empty or Junk Content**: Identify whether content is meaningful or filled with placeholders. |

Summary

Web scraping techniques involve systematically extracting data from web pages, and understanding the key components to target is essential for effective scraping. For instance, focusing on the post title, content structure, relevant keywords, and identifying unwanted spam or junk content can significantly enhance the relevance and quality of the extracted data. To implement these techniques successfully, utilizing libraries such as Beautiful Soup or Scrapy is recommended, while also ensuring compliance with the website’s terms of service to maintain ethical standards in web scraping.